Introduction to NewWave

Bioc 2021: 4-6 August

Workshop Description

The fast development of single cell sequencing technologies in the recent years has generated a gap between the throughput of the experiments and the capability of analizing these generated data. In this package, we implement mini-batch stochastic gradient descent and a framework that allow to work with HDF5 files. We decided to use a negative binomial model following the observation that droplet sequencing technologies do not induce zero inflation in the data. Thanks to these improvements and the possibility to massively parallelize the estimation process using PSOCK clusters, we are able to speed up the computation.

Introduction

NewWave assumes a negative binomial distribution where the mean parameter is related to each single observation while the dispersion parameter can be equal for all observation or related to each single gene.

\[Y_{ij} \sim NB(\mu_{ij},\theta_j)\] And this is how the parameters are estimated:

\[\ln(\mu_{ij}) = \Big(X\beta + (V\gamma)^T + W\alpha \Big)_{ij} \]

- \(X\) represents cells related information such as batch-effect and \(\beta\) the related parameters

- \(V\) represents genes level information such as GC content and \(\gamma\) the related parameters

- \(W\) represent low dimensional representation and \(\alpha\) is the matrix of the loadings

NewWave is designed for estimate \(\beta\), \(\gamma\),\(W\) and \(\alpha\) but only \(W\) and \(\alpha\) are mandatory, it could be used for dimensional reduction only.

\[\ln(\theta_j) = \zeta_j\] These are the steps used for estimate parameters :

- Initialization of regression parameters parameters using ridge regression, and low-rank rapresentation with PCA.

- Optimization of dispersion parameters, if genewise massively parallelizable.

- Optimization of the cell-related parameters, using MLE, massively parallelizable.

- Optimization of the gene-related parameters, using MLE, massive parallelizable.

While the initialization is done only once at the beginnig of the process the other three steps are done iteratively till convergence.

In order to reduce the memory consumption it uses a PSOCK cluster combined with the R package SharedObject that allow to share a matrix between different cores avoiding memory duplication. Thanks to that we can massively parallelize the estimation process with huge benefit in terms of time consumption. We can reduce even more the time consumption using some mini batch approaches on the different steps of the optimization.

I am going to show how to use NewWave with example data generated with Splatter.

Splatter is a R package designed to generate single cell transcriptomic data with a high level of customization.

In our implementation we want a batch effect to be corrected, the additionals batch parameters are setted in order to create more complex batch effect.

You can think to the group as a cell type, we will use those group to test the resulted low dimensional representation through a cluster analysis.

suppressPackageStartupMessages(

{library(SingleCellExperiment)

library(splatter)

library(irlba)

library(Rtsne)

library(ggplot2)

library(mclust)

library(NewWave)

})

params <- newSplatParams()

N=1000

data <- splatSimulateGroups(params,batchCells=c(N/2,N/2),

group.prob = rep(0.1,10),

de.prob = 0.2,

batch.facLoc = 2,

batch.facScale = 1,

verbose = FALSE) The resault of splatter is a SingleCellExperiment with the generated data in the assays counts.

data## class: SingleCellExperiment

## dim: 10000 1000

## metadata(1): Params

## assays(6): BatchCellMeans BaseCellMeans ... TrueCounts counts

## rownames(10000): Gene1 Gene2 ... Gene9999 Gene10000

## rowData names(16): Gene BaseGeneMean ... DEFacGroup9 DEFacGroup10

## colnames(1000): Cell1 Cell2 ... Cell999 Cell1000

## colData names(4): Cell Batch Group ExpLibSize

## reducedDimNames(0):

## mainExpName: NULL

## altExpNames(0):## [1] "matrix" "array"As you can see we have the number of cell that we choose(N=500) an 10 000 genes. For this workshop we will choose only the 500 most variable genes.

hvg <- rowVars(counts(data))

names(hvg) <- rownames(counts(data))

data <- data[names(sort(hvg,decreasing=TRUE))[1:500],]In a SingleCellExperiment the colData slot contains the sample level metadata. In our situation there are two important variable, Batch and Group.

colData(data)## DataFrame with 1000 rows and 4 columns

## Cell Batch Group ExpLibSize

## <character> <character> <factor> <numeric>

## Cell1 Cell1 Batch1 Group6 57952.5

## Cell2 Cell2 Batch1 Group5 57381.8

## Cell3 Cell3 Batch1 Group8 71355.3

## Cell4 Cell4 Batch1 Group10 46996.7

## Cell5 Cell5 Batch1 Group3 44746.5

## ... ... ... ... ...

## Cell996 Cell996 Batch2 Group9 45118.0

## Cell997 Cell997 Batch2 Group3 42245.2

## Cell998 Cell998 Batch2 Group4 66518.3

## Cell999 Cell999 Batch2 Group4 65121.5

## Cell1000 Cell1000 Batch2 Group8 71415.2IMPORTANT: For batch effect removal the batch variable must be a factor

data$Batch <- as.factor(data$Batch)NewWave takes as input raw data, not normalized. So now we have all the object needed to start.

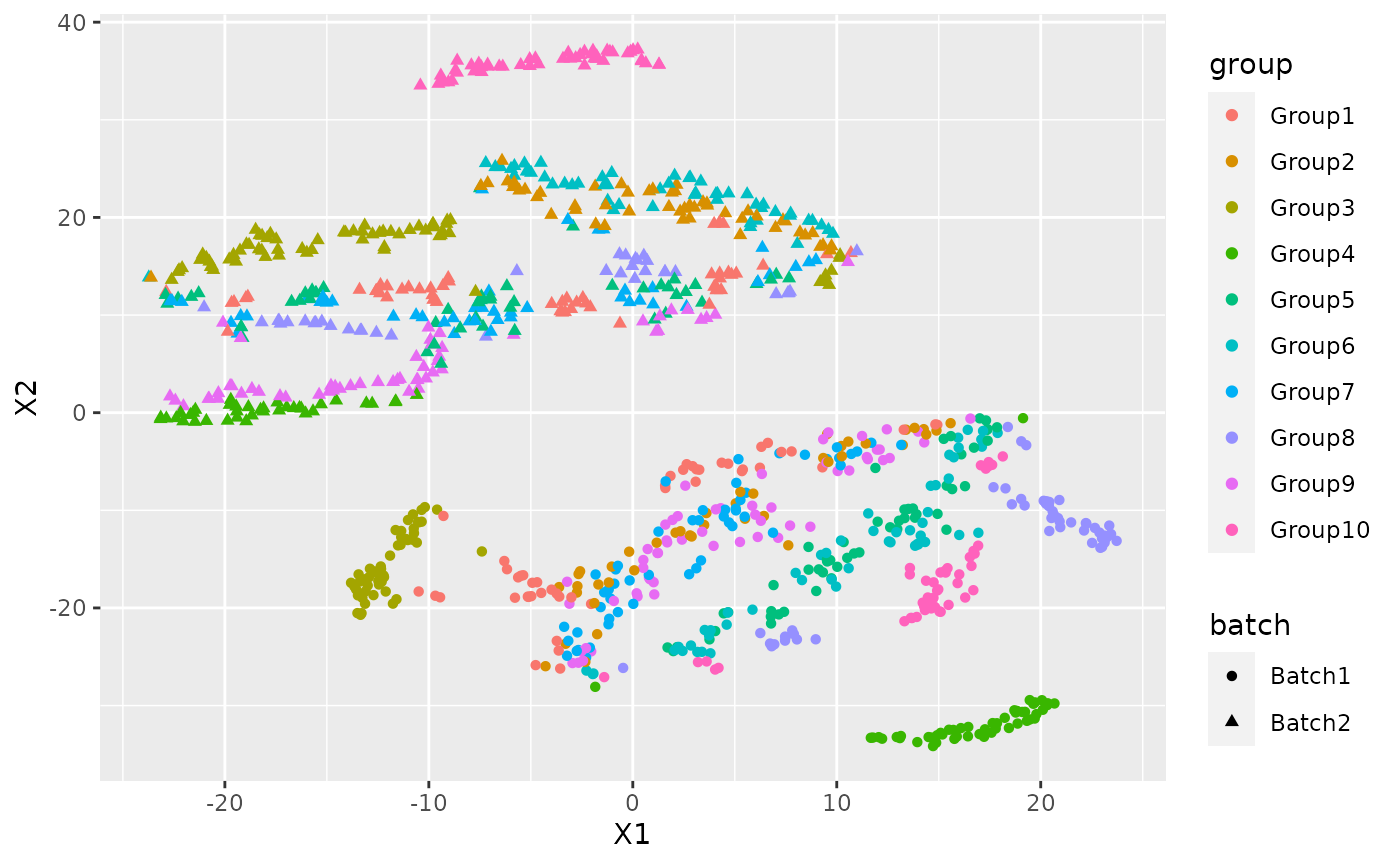

We can see the how the cells are distributed between group and batch.

pca <- prcomp_irlba(t(counts(data)),n=10)

plot_data <-data.frame(Rtsne(pca$x)$Y)

plot_data$batch <- data$Batch

plot_data$group <- data$Group

ggplot(plot_data, aes(x=X1,y=X2,col=group, shape=batch))+ geom_point()

Just for a later comparison we can see the performance of clustering on PCA low dimensional representation.

cluster <- kmeans(pca$x, 10)

adjustedRandIndex(cluster$cluster, data$Group)## [1] 0.03827329There is a clear batch effect between the cells.

Let’s try to correct it.

NewWave

I am going to show different implementation and the suggested way to use them with the given hardware.

Some basic advise:

- Verbose option has default FALSE, in this vignette I will change it for explanatory intentions, don’t do it with big dataset because it can sensibly slower the computation

- There are no concern about the dimension of mini-batches, I always used the 10% of the observations

Standard usage

I am going to present the stadard implementation of NewWave, it uses 1 cores, it estimates a dispersion parameters equal for all observation and uses all the observation in the estimation process.

The K parameter represents the number of dimension of the latent rapresentation.

The X parameter is used to indicates which variable represent the batch effect, in the same way can be inserted other cell-related variable and if you need some gene related variable those can be inserted in V.

res <- newWave(data, X = "~Batch", K=10, verbose = TRUE)## Time of setup

## user system elapsed

## 0.011 0.001 0.319

## Time of initialization

## user system elapsed

## 0.054 0.016 0.912## Iteration 1## penalized log-likelihood = -2037443.32678582## Time of dispersion optimization

## user system elapsed

## 1.087 0.047 1.123## after optimize dispersion = -1761735.2486796## Time of right optimization

## user system elapsed

## 0.001 0.000 12.931## after right optimization= -1712568.56031997## after orthogonalization = -1712564.39471734## Time of left optimization

## user system elapsed

## 0.007 0.004 13.336## after left optimization= -1706344.70711118## after orthogonalization = -1706344.11417023## Iteration 2## penalized log-likelihood = -1706344.11417023## Time of dispersion optimization

## user system elapsed

## 1.323 0.058 1.261## after optimize dispersion = -1705180.42919638## Time of right optimization

## user system elapsed

## 0.001 0.000 13.869## after right optimization= -1704625.65147708## after orthogonalization = -1704625.58877604## Time of left optimization

## user system elapsed

## 0.009 0.020 14.511## after left optimization= -1704412.41920802## after orthogonalization = -1704412.40261406## Iteration 3## penalized log-likelihood = -1704412.40261406## Time of dispersion optimization

## user system elapsed

## 1.265 0.072 1.216## after optimize dispersion = -1704408.58269685## Time of right optimization

## user system elapsed

## 0.001 0.000 11.999## after right optimization= -1704304.35907432## after orthogonalization = -1704304.35291608## Time of left optimization

## user system elapsed

## 0.025 0.012 14.008## after left optimization= -1704239.65815069## after orthogonalization = -1704239.65752193## Iteration 4## penalized log-likelihood = -1704239.65752193## Time of dispersion optimization

## user system elapsed

## 1.278 0.047 1.205## after optimize dispersion = -1704239.52819119## Time of right optimization

## user system elapsed

## 0.002 0.000 11.137## after right optimization= -1704191.26044754## after orthogonalization = -1704191.25733056## Time of left optimization

## user system elapsed

## 0.024 0.009 13.215## after left optimization= -1704154.99833729## after orthogonalization = -1704154.99790263In order to make it faster you can increase the number of cores using children parameter:

res2 <- newWave(data,X = "~Batch", K=10, verbose = TRUE, children=10)## Time of setup

## user system elapsed

## 0.040 0.000 1.711

## Time of initialization

## user system elapsed

## 0.143 0.020 1.184## Iteration 1## penalized log-likelihood = -2037443.32678783## Time of dispersion optimization

## user system elapsed

## 1.092 0.014 1.075## after optimize dispersion = -1761735.24876843## Time of right optimization

## user system elapsed

## 0.005 0.001 16.086## after right optimization= -1712568.56030678## after orthogonalization = -1712564.39470413## Time of left optimization

## user system elapsed

## 0.018 0.020 7.733## after left optimization= -1706344.70720138## after orthogonalization = -1706344.11426006## Iteration 2## penalized log-likelihood = -1706344.11426006## Time of dispersion optimization

## user system elapsed

## 1.370 0.038 1.288## after optimize dispersion = -1705180.42927138## Time of right optimization

## user system elapsed

## 0.006 0.001 17.422## after right optimization= -1704625.65126147## after orthogonalization = -1704625.58856274## Time of left optimization

## user system elapsed

## 0.002 0.004 7.889## after left optimization= -1704412.43087219## after orthogonalization = -1704412.41426885## Iteration 3## penalized log-likelihood = -1704412.41426885## Time of dispersion optimization

## user system elapsed

## 1.357 0.057 1.295## after optimize dispersion = -1704408.59452583## Time of right optimization

## user system elapsed

## 0.002 0.004 15.059## after right optimization= -1704304.38471573## after orthogonalization = -1704304.37854646## Time of left optimization

## user system elapsed

## 0.021 0.016 7.882## after left optimization= -1704239.6106561## after orthogonalization = -1704239.61004001## Iteration 4## penalized log-likelihood = -1704239.61004001## Time of dispersion optimization

## user system elapsed

## 1.295 0.091 1.269## after optimize dispersion = -1704239.4804606## Time of right optimization

## user system elapsed

## 0.002 0.004 14.358## after right optimization= -1704191.0961776## after orthogonalization = -1704191.09307776## Time of left optimization

## user system elapsed

## 0.007 0.009 7.979## after left optimization= -1704154.60600656## after orthogonalization = -1704154.60558375Commonwise dispersion and minibatch approaches

If you do not have an high number of cores to run newWave this is the fastest way to run.

Each of these three steps can be accelerated using mini batch, the number of observation is settled with these parameters:

- n_gene_disp : Number of genes to use in the dispersion optimization

- n_cell_par : Number of cells to use in the cells related parameters optimization

- n_gene_par : Number of genes to use in the genes related parameters optimization

res3 <- newWave(data,X = "~Batch", verbose = TRUE,K=10, children=2,

n_gene_disp = 100, n_gene_par = 100, n_cell_par = 100)## Time of setup

## user system elapsed

## 0.009 0.004 0.311

## Time of initialization

## user system elapsed

## 0.057 0.009 0.647## Iteration 1## penalized log-likelihood = -2037443.32678676## Time of dispersion optimization

## user system elapsed

## 1.103 0.030 1.098## after optimize dispersion = -1761735.2486814## Time of right optimization

## user system elapsed

## 0.001 0.000 13.018## after right optimization= -1712568.56031708## after orthogonalization = -1712564.39471445## Time of left optimization

## user system elapsed

## 0.008 0.004 7.121## after left optimization= -1706344.70711352## after orthogonalization = -1706344.11417257## Iteration 2## penalized log-likelihood = -1706344.11417257## Time of dispersion optimization

## user system elapsed

## 0.44 0.08 0.40## after optimize dispersion = -1705180.8522719## Time of right optimization

## user system elapsed

## 0.002 0.000 2.998## after right optimization= -1705073.81199177## after orthogonalization = -1705073.80295869## Time of left optimization

## user system elapsed

## 0.011 0.009 0.727## after left optimization= -1705068.56037081## after orthogonalization = -1705068.56012733## Iteration 3## penalized log-likelihood = -1705068.56012733## Time of dispersion optimization

## user system elapsed

## 0.499 0.058 0.437## after optimize dispersion = -1705068.56012733## Time of right optimization

## user system elapsed

## 0.000 0.002 2.707## after right optimization= -1705004.17286702## after orthogonalization = -1705004.16856## Time of left optimization

## user system elapsed

## 0.017 0.021 0.686## after left optimization= -1704997.50782022## after orthogonalization = -1704997.50744803Genewise dispersion mini-batch

If you have a lot of core disposable or you want to estimate a genewise dispersion parameter this is the fastest configuration:

IMPORTANT:do not use n_gene_disp in this case, it will slower the computation.

res3 <- newWave(data,X = "~Batch", verbose = TRUE,K=10, children=10,

n_gene_par = 100, n_cell_par = 100, commondispersion = FALSE)## Time of setup

## user system elapsed

## 0.031 0.000 1.692

## Time of initialization

## user system elapsed

## 0.113 0.021 1.123## Iteration 1## penalized log-likelihood = -2037443.32678603## Time of dispersion optimization

## user system elapsed

## 1.099 0.020 1.092## after optimize dispersion = -1761735.24867292## Time of right optimization

## user system elapsed

## 0.006 0.000 16.400## after right optimization= -1712568.5603126## after orthogonalization = -1712564.39470996## Time of left optimization

## user system elapsed

## 0.013 0.000 8.006## after left optimization= -1706344.70711143## after orthogonalization = -1706344.11417048## Iteration 2## penalized log-likelihood = -1706344.11417048## Time of dispersion optimization

## user system elapsed

## 0.147 0.040 0.975## after optimize dispersion = -1699482.9629962## Time of right optimization

## user system elapsed

## 0.006 0.000 3.727## after right optimization= -1699382.58869902## after orthogonalization = -1699382.57425098## Time of left optimization

## user system elapsed

## 0.003 0.008 0.794## after left optimization= -1699336.03400781## after orthogonalization = -1699336.03341578## Iteration 3## penalized log-likelihood = -1699336.03341578## Time of dispersion optimization

## user system elapsed

## 0.178 0.066 0.498## after optimize dispersion = -1699336.25979416## Time of right optimization

## user system elapsed

## 0.006 0.000 3.513## after right optimization= -1699265.6527594## after orthogonalization = -1699265.64829732## Time of left optimization

## user system elapsed

## 0.007 0.017 0.877## after left optimization= -1699222.01471638## after orthogonalization = -1699222.01423501This way will store the latent representation in the reducedDim slot.

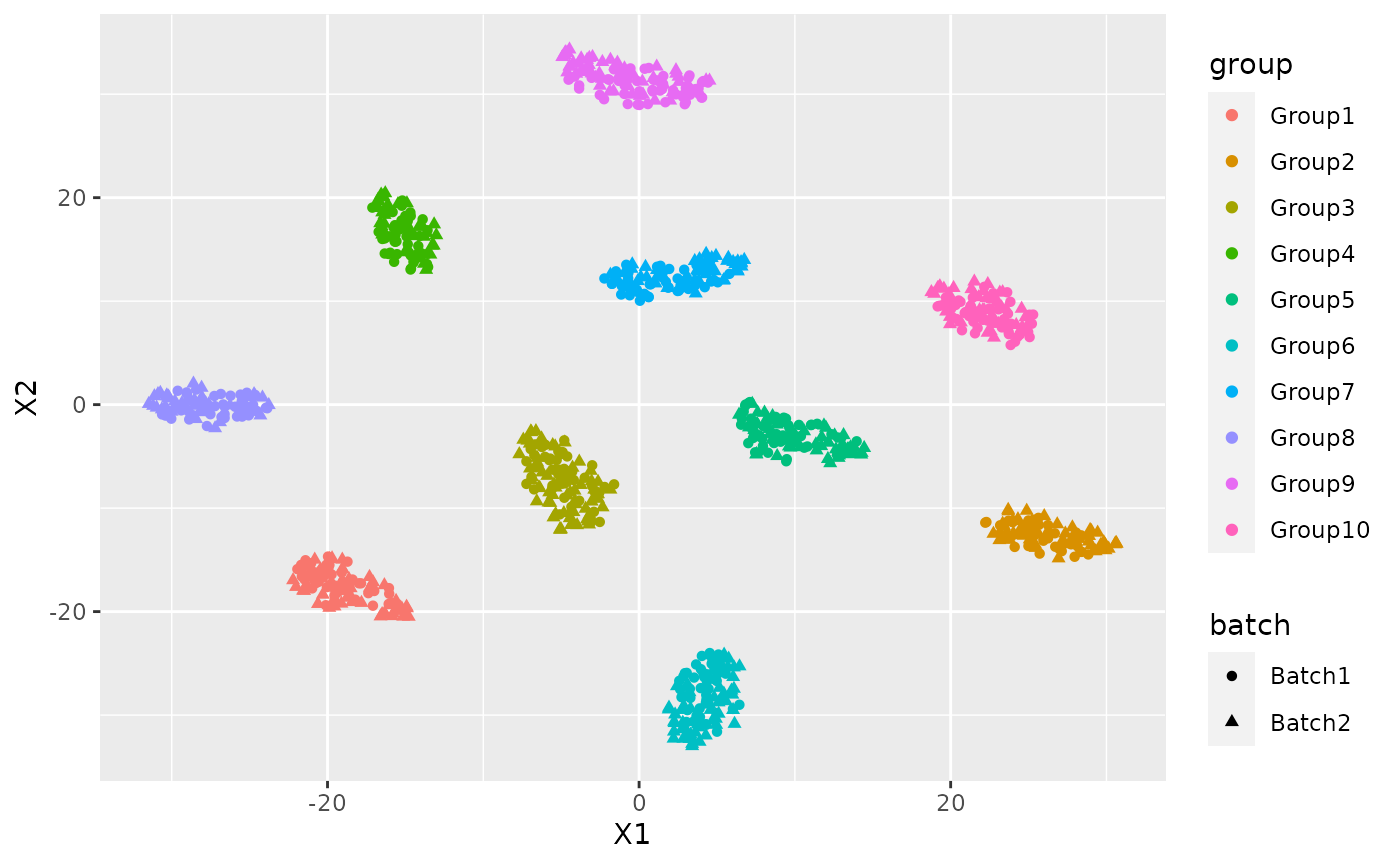

Now I can use the latent dimension representation for visualization purpose:

latent <- reducedDim(res)

tsne_latent <- data.frame(Rtsne(latent)$Y)

tsne_latent$batch <- data$Batch

tsne_latent$group <- data$Group

ggplot(tsne_latent, aes(x=X1,y=X2,col=group, shape=batch))+ geom_point()

or for clustering:

cluster <- kmeans(latent, 10)

adjustedRandIndex(cluster$cluster, data$Group)## [1] 1if we want to obtain more information about the model we can run newFit that has exactly the same parameters

par <- newFit(data,X = "~Batch", verbose = TRUE,K=10, children=10,

n_gene_par = 100, n_cell_par = 100, commondispersion = FALSE)## Time of setup

## user system elapsed

## 0.030 0.000 1.772

## Time of initialization

## user system elapsed

## 0.125 0.029 1.171## Iteration 1## penalized log-likelihood = -2037443.326783## Time of dispersion optimization

## user system elapsed

## 1.057 0.003 1.034## after optimize dispersion = -1761735.24865698## Time of right optimization

## user system elapsed

## 0.006 0.000 16.473## after right optimization= -1712568.56034132## after orthogonalization = -1712564.39473892## Time of left optimization

## user system elapsed

## 0.008 0.008 7.715## after left optimization= -1706344.70714661## after orthogonalization = -1706344.11420646## Iteration 2## penalized log-likelihood = -1706344.11420646## Time of dispersion optimization

## user system elapsed

## 0.157 0.049 0.986## after optimize dispersion = -1699482.96432227## Time of right optimization

## user system elapsed

## 0.007 0.000 3.617## after right optimization= -1699396.36354721## after orthogonalization = -1699396.35864513## Time of left optimization

## user system elapsed

## 0.010 0.009 0.885## after left optimization= -1699350.23676768## after orthogonalization = -1699350.23614003## Iteration 3## penalized log-likelihood = -1699350.23614003## Time of dispersion optimization

## user system elapsed

## 0.165 0.075 0.487## after optimize dispersion = -1699350.45898798## Time of right optimization

## user system elapsed

## 0.006 0.000 4.044## after right optimization= -1699271.92893419## after orthogonalization = -1699271.92377## Time of left optimization

## user system elapsed

## 0.013 0.008 0.830## after left optimization= -1699230.30301452## after orthogonalization = -1699230.30236034The result of newFit is an object of class newmodel where are stored all the estimated parameter seen in the introduction.

# View(par)With this object we can also compute the AIC and the BIC of the model.

## [1] 3433766## [1] 3541737NewWave on DelayedArray

In this package we can find a huge single cell dataset made by 1.3 M cell and 27 K genes.

Thanks to the hdf5 format that we can use trougth the DelayedArray framework this dataset is not on our RAM but it can be read and used anyway.

suppressPackageStartupMessages(library(TENxBrainData))

tenx <- TENxBrainData()## snapshotDate(): 2021-08-04## see ?TENxBrainData and browseVignettes('TENxBrainData') for documentation## downloading 1 resources## retrieving 1 resource## loading from cacheLet’s see how is made this SingleCellExperiment

tenx## class: SingleCellExperiment

## dim: 27998 1306127

## metadata(0):

## assays(1): counts

## rownames: NULL

## rowData names(2): Ensembl Symbol

## colnames(1306127): AAACCTGAGATAGGAG-1 AAACCTGAGCGGCTTC-1 ...

## TTTGTCAGTTAAAGTG-133 TTTGTCATCTGAAAGA-133

## colData names(4): Barcode Sequence Library Mouse

## reducedDimNames(0):

## mainExpName: NULL

## altExpNames(0):It has the slot counts were the date are stored exaclty how is needed by NewWave but in this case we do not have the batch effect and so we will only reduce the dimensionality of the dataset.

Despite of the huge dimension of the dataset it’s memory size is really small:

object.size(tenx)## 304126712 bytesonly 304 MB.

This is possible because the counts slot is not a matrix.

## [1] "DelayedMatrix"

## attr(,"package")

## [1] "DelayedArray"In order to manage this type of file NewWave uses a set of proper method with some strength and some weakness.

I am not going to show the exact implementation but I will explain how to use NewWave in this situation and why.

The biggest strength of using DelayedArray and HDF5 files is the reduction of the RAM consumption, the cost of that is that we read only a small piece, called chunck, of the dataset every time and so if it is done not properly in can strongly slower the computation especially when it is applied to an iterative optimization such as the one used by NewWave.

For this reason I strongly discourage to set verbose = TRUE, this will force NewWave to read the dataset, chunk by chunk, several time for each iteration.

This implementation is really effective only when applied on big dataset, let’s say at least 30K cell.

The are some differences with the matrix implementation: * there is a small approximation in the initialization * the estimation of the dispersion parameter is forced to be genewise

The best way to use NewWave in this case is the same we see before but I will not run this code because it would be long.

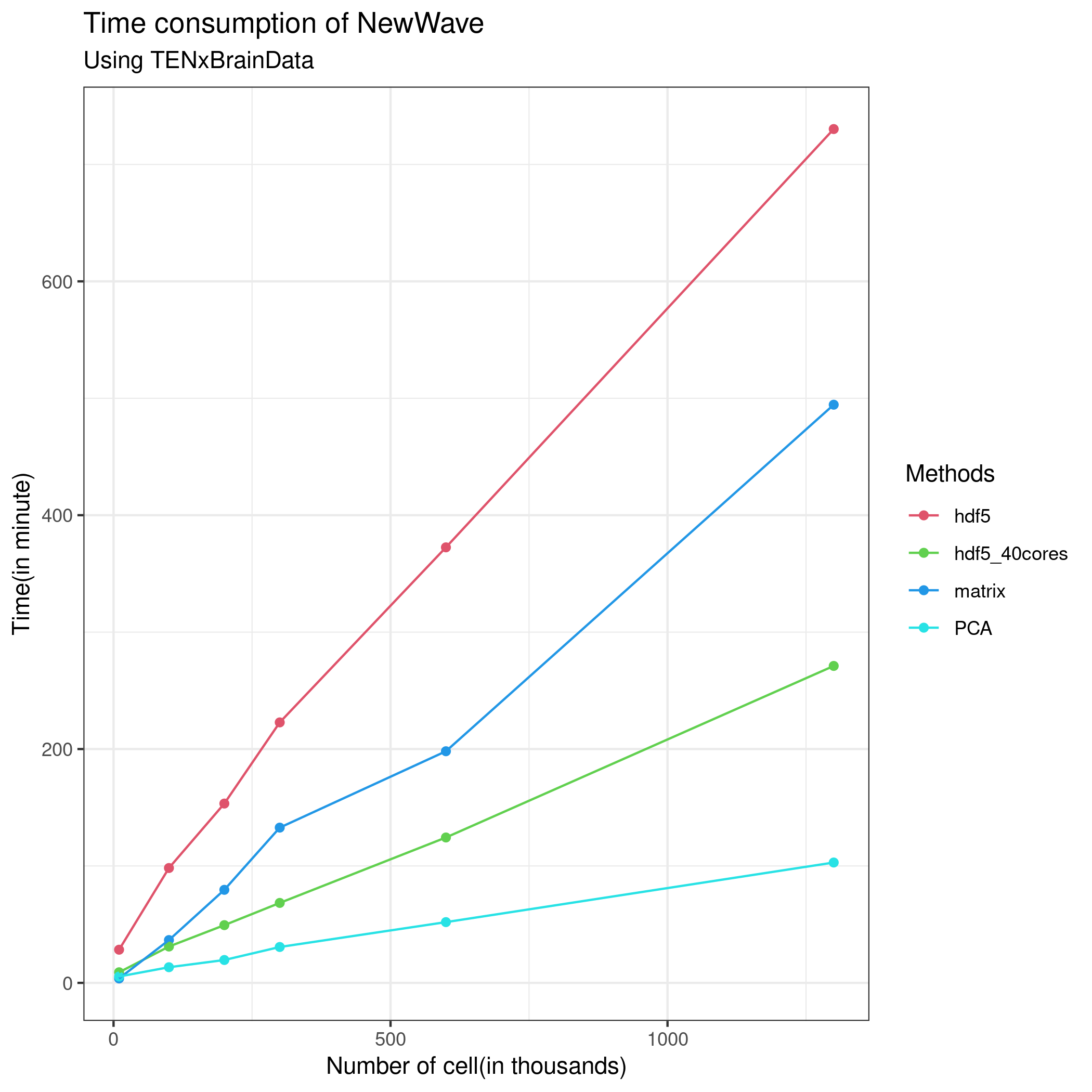

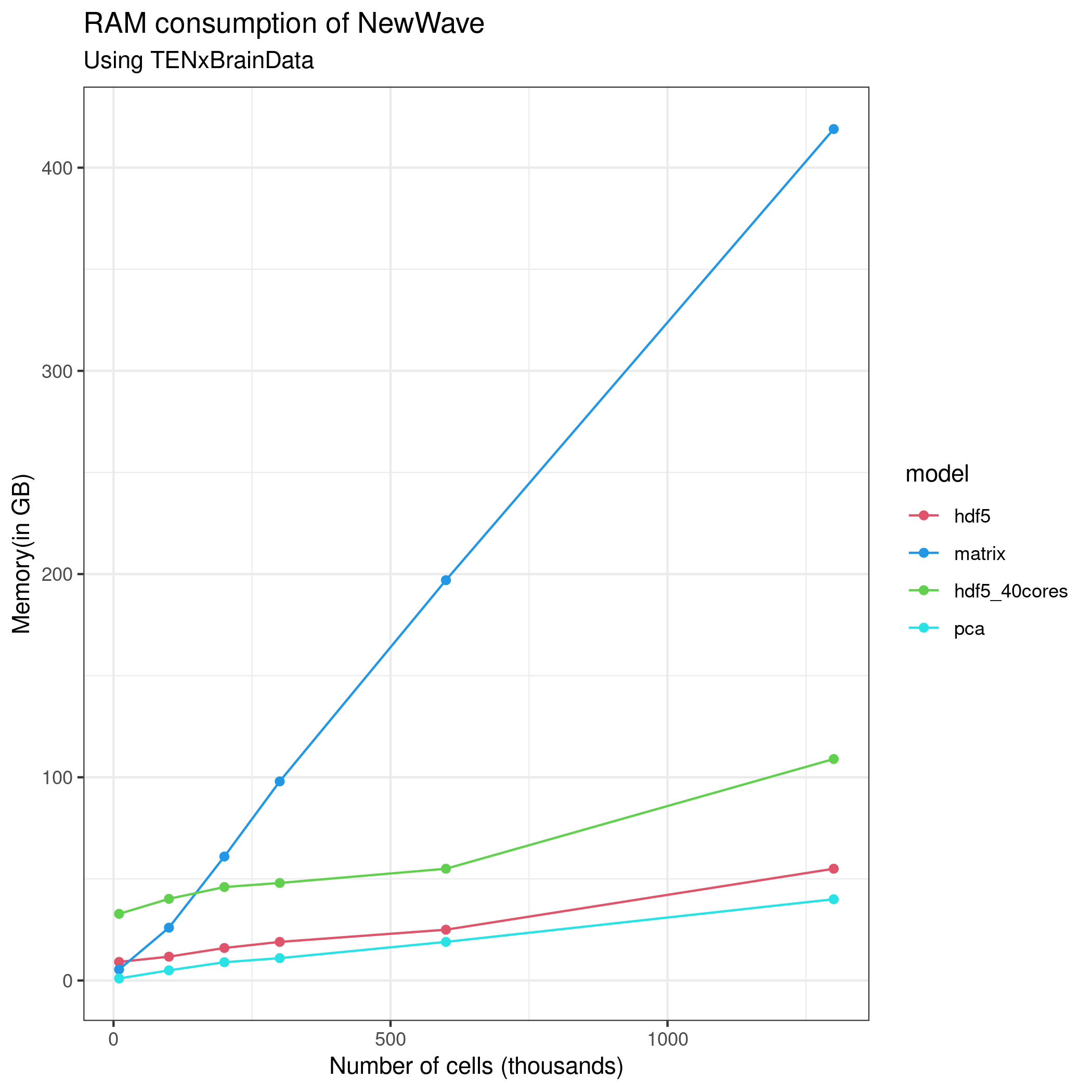

# res4 <- newWave(tenx[1:1000,1:1000], K=10, children=2,n_gene_par = 100, n_cell_par = 100, commondispersion = FALSE, verbose=T )The following figures explain the performance of NewWave in this dataset with differents approach, I have always used only the 1000 most variable genes.

Reference

The article that describes all the results obtained comparing differents implememntation is now available on biorxiv : https://www.biorxiv.org/content/10.1101/2021.08.02.453487v1

Session Information

## R version 4.1.0 (2021-05-18)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Ubuntu 20.04.2 LTS

##

## Matrix products: default

## BLAS/LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.8.so

##

## locale:

## [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

## [3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

## [5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=C

## [7] LC_PAPER=en_US.UTF-8 LC_NAME=C

## [9] LC_ADDRESS=C LC_TELEPHONE=C

## [11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

##

## attached base packages:

## [1] stats4 stats graphics grDevices utils datasets methods

## [8] base

##

## other attached packages:

## [1] TENxBrainData_1.13.1 HDF5Array_1.21.0

## [3] rhdf5_2.37.0 DelayedArray_0.19.1

## [5] NewWave_1.3.0 mclust_5.4.7

## [7] ggplot2_3.3.5 Rtsne_0.15

## [9] irlba_2.3.3 Matrix_1.3-4

## [11] splatter_1.17.1 SingleCellExperiment_1.15.1

## [13] SummarizedExperiment_1.23.1 Biobase_2.53.0

## [15] GenomicRanges_1.45.0 GenomeInfoDb_1.29.3

## [17] IRanges_2.27.0 S4Vectors_0.31.0

## [19] BiocGenerics_0.39.1 MatrixGenerics_1.5.2

## [21] matrixStats_0.60.0

##

## loaded via a namespace (and not attached):

## [1] colorspace_2.0-2 ellipsis_0.3.2

## [3] rprojroot_2.0.2 XVector_0.33.0

## [5] fs_1.5.0 farver_2.1.0

## [7] bit64_4.0.5 interactiveDisplayBase_1.31.2

## [9] AnnotationDbi_1.55.1 fansi_0.5.0

## [11] cachem_1.0.5 knitr_1.33

## [13] jsonlite_1.7.2 dbplyr_2.1.1

## [15] png_0.1-7 shiny_1.6.0

## [17] BiocManager_1.30.16 compiler_4.1.0

## [19] httr_1.4.2 backports_1.2.1

## [21] assertthat_0.2.1 fastmap_1.1.0

## [23] later_1.2.0 BiocSingular_1.9.1

## [25] htmltools_0.5.1.1 tools_4.1.0

## [27] rsvd_1.0.5 gtable_0.3.0

## [29] glue_1.4.2 GenomeInfoDbData_1.2.6

## [31] dplyr_1.0.7 rappdirs_0.3.3

## [33] Rcpp_1.0.7 jquerylib_0.1.4

## [35] pkgdown_1.6.1 Biostrings_2.61.2

## [37] vctrs_0.3.8 rhdf5filters_1.5.0

## [39] ExperimentHub_2.1.4 xfun_0.24

## [41] stringr_1.4.0 beachmat_2.9.0

## [43] mime_0.11 lifecycle_1.0.0

## [45] AnnotationHub_3.1.4 zlibbioc_1.39.0

## [47] scales_1.1.1 ragg_1.1.3

## [49] promises_1.2.0.1 parallel_4.1.0

## [51] yaml_2.2.1 curl_4.3.2

## [53] memoise_2.0.0 sass_0.4.0

## [55] stringi_1.7.3 RSQLite_2.2.7

## [57] BiocVersion_3.14.0 highr_0.9

## [59] desc_1.3.0 ScaledMatrix_1.1.0

## [61] checkmate_2.0.0 filelock_1.0.2

## [63] BiocParallel_1.27.2 rlang_0.4.11

## [65] pkgconfig_2.0.3 systemfonts_1.0.2

## [67] bitops_1.0-7 evaluate_0.14

## [69] lattice_0.20-44 purrr_0.3.4

## [71] Rhdf5lib_1.15.2 labeling_0.4.2

## [73] bit_4.0.4 tidyselect_1.1.1

## [75] magrittr_2.0.1 R6_2.5.0

## [77] generics_0.1.0 DBI_1.1.1

## [79] pillar_1.6.2 withr_2.4.2

## [81] SharedObject_1.7.0 KEGGREST_1.33.0

## [83] RCurl_1.98-1.3 tibble_3.1.3

## [85] crayon_1.4.1 utf8_1.2.2

## [87] BiocFileCache_2.1.1 rmarkdown_2.9

## [89] locfit_1.5-9.4 grid_4.1.0

## [91] blob_1.2.2 digest_0.6.27

## [93] xtable_1.8-4 httpuv_1.6.1

## [95] textshaping_0.3.5 munsell_0.5.0

## [97] bslib_0.2.5.1